At Heartbeat, complete type safety is non-negotiable. When we migrated to Prisma a year ago, two problems stood in the way of full type safety in our database:

- Branded types

- JSON columns

With some creative hacking into Prisma’s internals, we were able to get these critical Typescript features implemented & preserve our end-to-end type safety.

Branded Types

All of the ids in Heartbeat are branded, which means that rather than being typed as just a string, each kind of id has its own type. These branded types are defined like this:

export type CommunityID = string & { readonly _: "__CommunityID__" };

export type ChannelID = string & { readonly _: "__ChannelID__" };

export type UserID = string & { readonly _: "__UserID__" };

Whenever an id is created for the first time, we cast it as the appropriate type:

const user = {

id: uuidv4() as UserID,

firstName: "Mayhul",

};

After the initial cast, the unique types mean that we can define functions that expect a certain kind of id and produce a type error when given any other:

interface Post {

id: PostID;

createdBy: UserID;

communityID: CommunityID;

//...

}

function getIsUserAdmin(userID: UserID) {

//...

}

function getShouldShowPost(post: Post) {

//If we accidentally call this function with the wrong id, we get a type error:

//const isAdmin = getIsUserAdmin(post.communityID);

//Passing the correct kind of id type-checks

const isAdmin = getIsUserAdmin(post.createdBy);

//...

}

Our codebase has 50+ different kinds of ids, so branded types prevent an entire class of bugs that would otherwise be very easy to introduce.
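As a hedged aside, the pattern can be tried in isolation. The sketch below is self-contained and makes a few assumptions that are not from our codebase: the generic `Brand` helper is hypothetical (we write each brand out by hand), the ids are hard-coded strings rather than `uuidv4()` output, and `getIsUserAdmin` has a placeholder body.

```typescript
// Hypothetical helper: builds a branded type from a base type and a tag.
type Brand<Base, Tag extends string> = Base & { readonly _: Tag };

type UserID = Brand<string, "__UserID__">;
type CommunityID = Brand<string, "__CommunityID__">;

// Placeholder logic, only here so the example has something to run.
function getIsUserAdmin(userID: UserID): boolean {
  return userID.startsWith("admin-");
}

// The cast happens once, at the point where the id is created.
const userID = "admin-1" as UserID;
const communityID = "community-1" as CommunityID;

// Type-checks: a UserID is expected and a UserID is passed.
const isAdmin = getIsUserAdmin(userID);

// The brand only exists at compile time, so the mistaken call still runs;
// the directive below documents that the compiler rejects it.
// @ts-expect-error CommunityID is not assignable to UserID
const mistaken = getIsUserAdmin(communityID);
```

At runtime both values are ordinary strings, which is why the protection comes entirely from the type checker.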

The problem is that by default, this branded type gets lost when interacting with Prisma:

model User {

id String @id

firstName String

}

/*---------------------------------*/

await prisma.user.create({

data: {

id: uuidv4() as UserID,

firstName: 'Mayhul'

}

});

const user = await prisma.user.findFirst({

where: {

firstName: 'Mayhul'

}

});

const userID = user.id; //This is typed as a string instead of UserID

There’s no way for us to tell Prisma that the type of id is specifically UserID, not just string.

Json Columns

The problem with Json columns is more straightforward. By default, there’s no way to define a more precise type for Json columns in a Prisma schema.

model Event {

id String @id

location Json

}

await prisma.event.create({

data: {

id: uuidv4() as EventID,

location: {

hello: 123

} //Prisma will allow me to put anything here

}

})

const event = await prisma.event.findFirst({});

//The type of location here is just object.

//I have no additional context for what's in here

const location = event.location;

I know Json columns in SQL can be controversial, but there’s one very simple & common use case that makes us reach for Json columns quite often: discriminated unions.

A discriminated union is a data type that can be represented by multiple different kinds of values but can only be one of those kinds at any given time. For example, if we were defining shapes as a discriminated union in Typescript, it might look like this:

type Shape =

| {

kind: "CIRCLE";

radius: number;

}

| {

kind: "RECTANGLE";

width: number;

length: number;

};

A key factor here is that each kind of value (Circle, Rectangle) has different fields. In the context of Heartbeat, one example of a discriminated union is an event location. When creating an event in the platform, a user chooses whether that event is happening in a Heartbeat voice channel, in a Zoom meeting or at a custom url. So the discriminated union looks like this:

type EventLocation =

| {

kind: "VOICE_CHANNEL";

voiceChannelID: VoiceChannelID;

}

| {

kind: "ZOOM";

zoomMeetingID: number;

}

| {

kind: "CUSTOM_URL";

url: string;

};

The question is, how do you store this data type in a SQL database? The main alternative to a Json column would be to include all of the columns in the table as optional values.

enum EventLocationKind {

VOICE_CHANNEL

ZOOM

CUSTOM_URL

}

model Event {

id String @id

locationKind EventLocationKind

voiceChannelID String?

zoomMeetingID Int?

url String?

}

However, with this approach, there are no type-safety guarantees that each locationKind is being inserted with the correct corresponding keys.

await prisma.event.create({

data: {

id: uuidv4() as EventID,

//Inserting a voice channel event with a zoom meeting id is perfectly valid according to Typescript

locationKind: 'VOICE_CHANNEL',

zoomMeetingID: 2453,

}

})

With Json columns, assuming there were some way to tell Prisma what the type of a particular Json column should be, we could represent these discriminated unions in the database more naturally.

type EventLocation =

| {

kind: "VOICE_CHANNEL"

voiceChannelID: VoiceChannelID

}

| {

kind: "ZOOM"

zoomMeetingID: number

}

| {

kind: "CUSTOM_URL"

url: string

}

model Event {

id String @id

//We need some way to tell Prisma that the expected shape of location is EventLocation

location Json

}

await prisma.event.create({

data: {

id: uuidv4() as EventID,

//If Prisma knew that location was supposed to be EventLocation, we would see an error here

location: {

kind: "VOICE_CHANNEL",

zoomMeetingID: 2453,

},

},

})
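To make the payoff concrete, here is a minimal sketch of how application code might consume EventLocation once it is properly typed. The `describeLocation` function is hypothetical and exists only for illustration; the point is that switching on `kind` narrows the type, so each branch can only touch the fields that exist for that kind.

```typescript
type VoiceChannelID = string & { readonly _: "__VoiceChannelID__" };

type EventLocation =
  | { kind: "VOICE_CHANNEL"; voiceChannelID: VoiceChannelID }
  | { kind: "ZOOM"; zoomMeetingID: number }
  | { kind: "CUSTOM_URL"; url: string };

// Hypothetical consumer: every branch sees only the fields of its own kind.
function describeLocation(location: EventLocation): string {
  switch (location.kind) {
    case "VOICE_CHANNEL":
      return `voice channel ${location.voiceChannelID}`;
    case "ZOOM":
      // location.url would be a type error here: ZOOM has no url field.
      return `Zoom meeting ${location.zoomMeetingID}`;
    case "CUSTOM_URL":
      return `link ${location.url}`;
  }
}

const zoomDescription = describeLocation({ kind: "ZOOM", zoomMeetingID: 2453 });
```

With the flattened optional-column layout, none of this narrowing is available, because every field is independently nullable.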

The problem with both branded types & Json columns is similar. Prisma’s model uses a more generic representation of the type (string and object), when we as the schema definer have a more precise type that we want represented (UserID and EventLocation). So we just need some way to override Prisma’s generic types with our more specific version.

Digging into Prisma

Luckily, the way that Prisma is architected is actually pretty amenable to making these changes. Prisma uses a codegen step to translate the schema defined in schema.prisma into the Typescript types that are used by the @prisma/client package. Whenever npx prisma generate or npx prisma db push is run, this file gets generated: node_modules/.prisma/client/index.d.ts. This file just contains the types used by @prisma/client. Any changes made to this file will affect the compile-time type checking that happens, but will not actually affect any of Prisma’s internal workings.

This is perfect for us! If we can edit this file to include the more strict versions of the types, we can get the type checking that we want without messing with any of Prisma’s actual functionality.

Figuring out how this file was structured was complicated at first. For our Prisma schema, the default index.d.ts that Prisma generates is almost 100k lines long. But after some extensive Ctrl+F work, I found that each Prisma model has the following types generated for it [1]:

- $UserPayload

- UserWhereInput

- UserCreateInput

- UserUpdateInput

The $UserPayload type is where Prisma defines the return type for each model. So changing $UserPayload would change the type of const user = await prisma.user.findFirst().

UserWhereInput is where Prisma defines the expected type of the where filter.

await prisma.user.findFirst({

where: {}, //This object is expected to match UserWhereInput

});

And finally, UserCreateInput and UserUpdateInput are where Prisma defines the expected type of data when creating or updating a row.

await prisma.user.create({

data: {}, //This object is expected to match UserCreateInput

});

await prisma.user.update({

where: {

id: userID,

},

data: {}, //This object is expected to match UserUpdateInput

});

This is what the default types look like:

model Event {

id String @id

communityID String

location Json

}

export type $EventPayload<

ExtArgs extends $Extensions.InternalArgs = $Extensions.DefaultArgs

> = {

name: "Event"

scalars: $Extensions.GetPayloadResult<

{

id: string

communityID: string

location: Prisma.JsonValue

},

ExtArgs["result"]["event"]

>

composites: {}

}

export type EventWhereInput = {

AND?: EventWhereInput | EventWhereInput[]

OR?: EventWhereInput[]

NOT?: EventWhereInput | EventWhereInput[]

id?: StringFilter<"Event"> | string

communityID?: StringFilter<"Event"> | string

location?: JsonFilter<"Event">

}

export type EventCreateInput = {

id: string

location: JsonNullValueInput | InputJsonValue

}

export type EventUpdateInput = {

id?: StringFieldUpdateOperationsInput | string

location?: JsonNullValueInput | InputJsonValue

}

Now that we’ve identified this, the next step is clear. All we need to do is swap out id: string with id: EventID and location: Prisma.JsonValue with location: EventLocation.

Defining our Types

The next question is where we should put our type definitions. Right now, we define the schema for our models in schema.prisma which then get code-gened into index.d.ts. If we want to tell Prisma that id: string should actually be id: EventID, we need somewhere to put this information. The most straightforward way we found to do this is to maintain a parallel set of Typescript definitions alongside our schema.prisma file. The way we do it is we have a db-types folder in our codebase which just contains type definitions. Each model in our database is defined both in schema.prisma AND in db-types. For example, if our schema.prisma looks like this:

model User {

id String @id

communityID String

firstName String

lastName String

}

model Event {

id String @id

communityID String

location Json

}

We would have the following file db-types/types.ts:

export type CommunityID = string & { readonly _: "__CommunityID__" };

export type UserID = string & { readonly _: "__UserID__" };

export type EventID = string & { readonly _: "__EventID__" };

interface tUser {

id: UserID;

communityID: CommunityID;

firstName: string;

lastName: string;

}

type EventLocation =

| {

kind: "VOICE_CHANNEL";

voiceChannelID: VoiceChannelID;

}

| {

kind: "ZOOM";

zoomMeetingID: number;

}

| {

kind: "CUSTOM_URL";

url: string;

};

interface tEvent {

id: EventID;

communityID: CommunityID;

location: EventLocation;

}

Our convention is that the type for each database model starts with a lowercase t. So for each model in schema.prisma, such as User, we would expect to have a corresponding tUser type in db-types. The type in db-types is where we define additional information about the model, such as branded types & the expected shape for Json columns. This does require a bit of duplicate work when creating or editing database models, because they need to be updated in both schema.prisma and db-types.

Transferring our Types

Now that we have more precise types defined in db-types, we need some way to have these types represented in index.d.ts. In order to do this, we will essentially be running our own codegen script that runs after Prisma’s. Our codegen script will read from db-types and edit index.d.ts to be in sync. To write our codegen script, we use jscodeshift, a library for writing Javascript/Typescript codemods.

This gist contains a simplified version of prismaSchemaEnricher.ts, which is our codegen script. Essentially, this script is doing the following:

- Read all of the types in db-types. For each type that starts with t, parse the fields for that type into an array. For every other type, copy it to the top of index.d.ts.

- For each database model (let’s say we’re doing tEvent):

  - Find the $EventPayload type in index.d.ts. Make sure that every field in tEvent appears in $EventPayload & every field in $EventPayload appears in tEvent. If not, throw an error. This makes sure that schema.prisma and db-types are in sync with each other.

  - For each field, if the type of that field is either a branded type or a json object, replace the type in $EventPayload with the type in tEvent.

  - Do the same for EventCreateInput and EventUpdateInput.

  - For EventWhereInput, replace each field that is a branded string with BrandedStringFilter<[BrandedStringType]>. Replace each field that is a json object with JSONWhereFilter<[ObjectType]>.
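The sync check and the where-filter rewrite from the steps above can be sketched without jscodeshift. This is not the real prismaSchemaEnricher.ts: `FieldInfo`, `assertFieldsInSync` and `whereInputTypeFor` are hypothetical names, and the sketch works on plain arrays and strings rather than on an AST.

```typescript
// Hypothetical, simplified description of one field parsed from db-types.
interface FieldInfo {
  name: string;
  type: string; // type as written in db-types, e.g. "EventID"
  kind: "brandedString" | "json" | "plain";
}

// Throws if the field names in db-types and in the generated payload differ.
function assertFieldsInSync(
  model: string,
  dbTypeFields: string[],
  payloadFields: string[],
): void {
  const inPayload = new Set(payloadFields);
  const inDbTypes = new Set(dbTypeFields);
  const missingFromPayload = dbTypeFields.filter((f) => !inPayload.has(f));
  const missingFromDbTypes = payloadFields.filter((f) => !inDbTypes.has(f));
  if (missingFromPayload.length > 0 || missingFromDbTypes.length > 0) {
    throw new Error(
      `${model} is out of sync. Missing from schema.prisma: ` +
        `[${missingFromPayload.join(", ")}]. Missing from db-types: ` +
        `[${missingFromDbTypes.join(", ")}].`,
    );
  }
}

// Returns the replacement type text for a where-input field,
// or undefined when the generated type should be left untouched.
function whereInputTypeFor(field: FieldInfo): string | undefined {
  switch (field.kind) {
    case "brandedString":
      return `BrandedStringFilter<${field.type}>`;
    case "json":
      return `JSONWhereFilter<${field.type}>`;
    case "plain":
      return undefined;
  }
}

const idFilter = whereInputTypeFor({ name: "id", type: "EventID", kind: "brandedString" });
const locationFilter = whereInputTypeFor({ name: "location", type: "EventLocation", kind: "json" });
```

Failing loudly in the sync check is the important design choice: a field that exists in only one of the two definitions stops the codegen instead of silently producing wrong types.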

So after this script runs, the types in index.d.ts would look like this:

export type $EventPayload<ExtArgs extends $Extensions.InternalArgs = $Extensions.DefaultArgs> = {

name: "Event";

scalars: $Extensions.GetPayloadResult<

{

id: EventID;

communityID: CommunityID;

location: EventLocation;

},

ExtArgs["result"]["event"]

>;

composites: {};

};

export type EventWhereInput = {

AND?: EventWhereInput | EventWhereInput[];

OR?: EventWhereInput[];

NOT?: EventWhereInput | EventWhereInput[];

id?: BrandedStringFilter<EventID> | undefined;

communityID?: BrandedStringFilter<CommunityID> | undefined;

location?: JSONWhereFilter<EventLocation>;

};

export type EventCreateInput = {

id: EventID;

location: EventLocation;

};

export type EventUpdateInput = {

id?: EventID;

location?: EventLocation;

};

All of the non-t types get copied to the top of index.d.ts, so when $EventPayload references EventLocation, that type has been defined earlier in the file. Also copied to the top are the BrandedStringFilter and JSONWhereFilter types. The BrandedStringFilter type looks like this:

type BrandedStringFilter<T extends string> =

| {

equals?: T;

not?: T;

in?: T | Array<T>;

notIn?: T | Array<T>;

contains?: string;

startsWith?: string;

endsWith?: string;

search?: string;

}

| T;

This gives us the same string filtering options as before, but makes sure to type check them against the branded string. Thanks to BrandedStringFilter, we’ll get the following type safety guarantees:

async function doSomething(communityID: CommunityID, eventIDs: EventID[]) {

await prisma.event.findMany({

where: {

//This will show a type error because EventID is expected

id: communityID,

},

});

await prisma.event.findMany({

where: {

id: {

//This will show a type error because EventID is expected

in: [communityID],

},

},

});

await prisma.event.findMany({

where: {

id: {

//This will pass type-checking

in: eventIDs,

},

},

});

}

In general, thanks to the new types that we’ve updated in index.d.ts, we now have all of these amazing type safety guarantees:

async function doSomething(eventID: EventID) {

const event = await prisma.event.findUniqueOrThrow({

where: {

id: eventID,

},

});

//The type of location is EventLocation, not object

const location = event.location;

//The type of communityID is CommunityID, not string

const communityID = event.communityID;

}

async function createEvent(communityID: CommunityID, userID: UserID) {

await prisma.event.create({

data: {

id: uuidv4() as EventID,

//This will show a type error because CommunityID is expected

communityID: userID,

//This will show a type error because the voiceChannelID field is missing

location: {

kind: "VOICE_CHANNEL",

},

},

});

}

async function updateEvent(eventID: EventID) {

await prisma.event.update({

where: {

id: eventID,

},

data: {

//This will show a type error because of the CUSTO_URL typo

location: {

kind: "CUSTO_URL",

url: "https://meet.google.com/rmx-zagl-dja",

},

},

});

}

And with that, we’re done! We now have type safety for branded types & json columns across creations, selects, updates & filtering.

Advanced Filtering

But…if you were paying attention, I still haven’t explained how JSONWhereFilter works. SQL filtering on Json columns can be complicated, and ensuring type safety on these filters is even more complicated. This page contains the full documentation about how to use Prisma to filter on json columns. As a simple example, this is how you check if a certain field in a json column has a certain value:

const zoomEvents = await prisma.event.findMany({

where: {

location: {

path: "$.kind",

equals: "ZOOM",

},

},

});

Like before, the problem with this approach is that there’s no type safety. If we had a typo and accidentally passed in $.knid, Prisma wouldn’t catch that. We wouldn’t even get a runtime error. The query would simply return 0 results because none of the location fields have a key called knid.

Our approach for type safety here is to use branded objects. In our codegen script, we tell Prisma that the expected type for Json filters is:

type JSONWhereFilter<T> = object & { readonly _jsonFilter: T };

export type EventWhereInput = {

//...

location?: JSONWhereFilter<EventLocation>;

//...

};

This approach is similar to how branded strings work. What we’re saying is that the location where filter is allowed to accept any object, as long as that object has a _jsonFilter key with the type EventLocation. We then have a helper function that generates a Json filter object with the _jsonFilter key. The point of requiring the _jsonFilter key is to prevent us from attempting to filter on a json column without using the helper function.

The helper function looks like this:

export function getPrismaObjFilter<

Obj,

Path extends AllPaths<Obj>,

Value extends GetFromPath<Obj, Path> & (string | boolean | number),

>(path: Path, value: Value) {

return {

path: "$." + path,

equals: value,

_jsonFilter: undefined as unknown as Obj,

};

}

const zoomEvents = await prisma.event.findMany({

where: {

//This will show an error because the _jsonFilter key is missing

//We show an error to force using the helper function

//Forcing use of the helper function ensures type-safety is maintained

location: {

path: "$.kind",

equals: "ZOOM",

},

},

});

const zoomEvents = await prisma.event.findMany({

where: {

location: getPrismaObjFilter("kind", "ZOOM"),

},

});

const zoomEvents = await prisma.event.findMany({

where: {

//This will show a type error because knid is not a valid path

location: getPrismaObjFilter("knid", "ZOOM"),

},

});

const zoomEvents = await prisma.event.findMany({

where: {

//This will show a type error because GOOGLE_MEET is not a valid value for kind

location: getPrismaObjFilter("kind", "GOOGLE_MEET"),

},

});

The helper function uses the AllPaths type to check that a given string is a valid path for a given object. This is a pretty advanced type that even I don’t fully understand the details of. Luckily, I was able to take it from Gabriel, who’s a true Typescript expert.

The _jsonFilter key only exists for type safety reasons and doesn’t actually do anything at runtime, so we don’t actually need to pass in a value. Instead, we can do _jsonFilter: undefined as unknown as Obj to signal to Typescript that this function is good to go while making sure that _jsonFilter has no runtime footprint.
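For readers curious what types like AllPaths and GetFromPath might look like, here is a minimal sketch. It is not Gabriel’s implementation: it assumes dot-separated paths, ignores arrays, and leaves out the _jsonFilter brand. The curried `jsonFilterFor` helper is also hypothetical; it exists only so the object type can be given explicitly in a standalone example.

```typescript
type Primitive = string | number | boolean;

// Union of dot-separated paths to every key of T (distributes over unions).
type AllPaths<T> = T extends Primitive
  ? never
  : T extends object
    ? {
        [K in keyof T & string]: T[K] extends Primitive
          ? K
          : K | `${K}.${AllPaths<T[K]>}`;
      }[keyof T & string]
    : never;

// Type of the value found at path P inside T (never if the path is absent).
type GetFromPath<T, P> = T extends unknown
  ? P extends `${infer Head}.${infer Rest}`
    ? Head extends keyof T
      ? GetFromPath<T[Head], Rest>
      : never
    : P extends keyof T
      ? T[P]
      : never
  : never;

type EventLocation =
  | { kind: "VOICE_CHANNEL"; voiceChannelID: string }
  | { kind: "ZOOM"; zoomMeetingID: number }
  | { kind: "CUSTOM_URL"; url: string };

// Hypothetical curried helper: fix the object type first, then check path and value.
function jsonFilterFor<Obj>() {
  return function <
    Path extends AllPaths<Obj>,
    Value extends GetFromPath<Obj, Path> & Primitive
  >(path: Path, value: Value) {
    return { path: "$." + path, equals: value };
  };
}

const eventLocationFilter = jsonFilterFor<EventLocation>();

const zoomFilter = eventLocationFilter("kind", "ZOOM");

// @ts-expect-error "knid" is not a path of EventLocation
eventLocationFilter("knid", "ZOOM");

// @ts-expect-error "GOOGLE_MEET" is not a valid value for kind
eventLocationFilter("kind", "GOOGLE_MEET");
```

Both rejected calls still run if type checking is skipped, which is the same trade-off as with branded ids: the guarantees live in the compiler, not in the emitted JavaScript.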

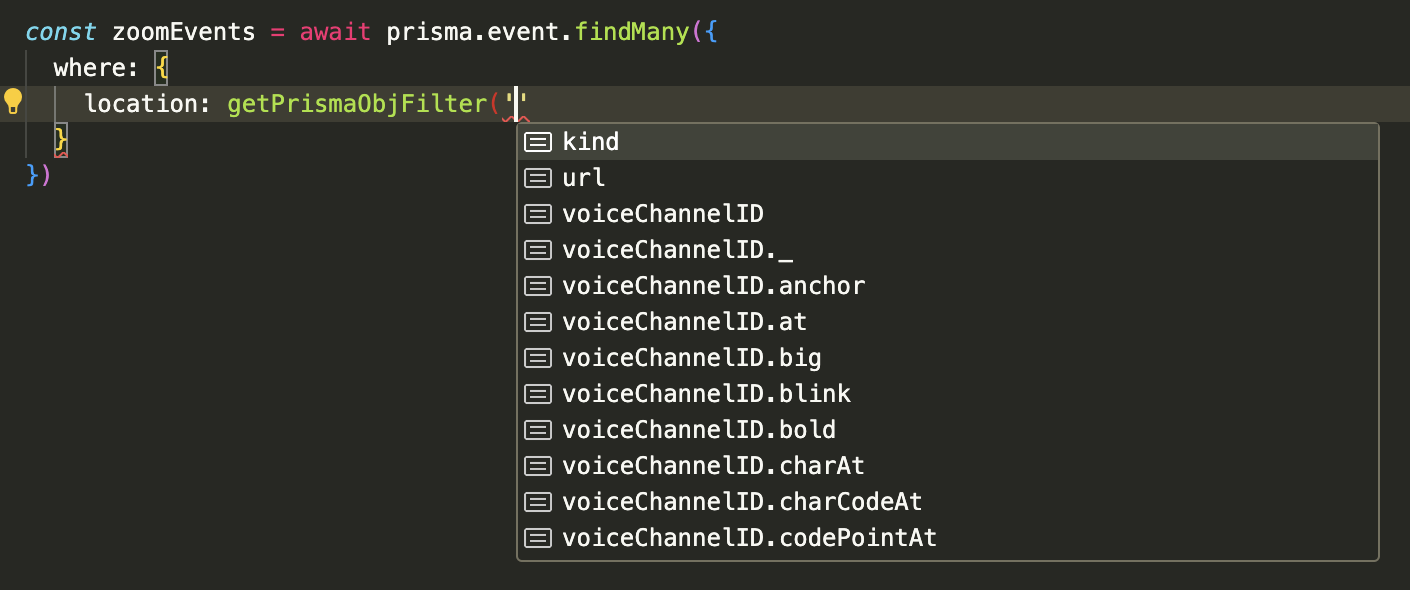

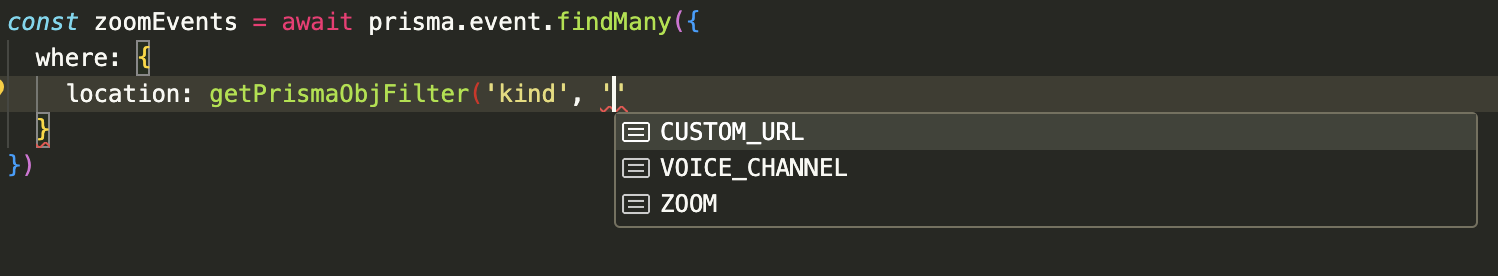

Requiring _jsonFilter to be EventLocation means that type inference works, so we get autocomplete as we type:

Putting it all together

The last step here is actually running the codegen script. In order to do this, we have some simple package.json scripts:

{

"scripts": {

...

"prisma-enrich-types": "npx jscodeshift -t ./tools/prismaSchemaEnricher.ts --extensions=ts --parser=ts './node_modules/.prisma/client/index.d.ts'",

"prisma-gen": "npx prisma generate && npm run prisma-enrich-types",

"prisma-push": "npx prisma db push && npm run prisma-enrich-types"

...

},

"dependencies": {

...

}

}

Instead of running npx prisma db push directly when we want to make a change to the database, we run npm run prisma-push, which runs the Prisma script followed by our codegen script using jscodeshift. Often, we make changes to db-types without making changes to the actual database model. For example, adding a new field to a Json column would involve changing db-types but would not involve any changes to schema.prisma. In this case, we would just run npm run prisma-gen which regenerates the Prisma types without making any changes to our database.

Limitations

Lastly, this approach does come with some downsides that are helpful to be aware of. The big one is that the type safety offered for Json columns is not implemented at the database level. So while our application code has checks in place to make sure that only correctly typed Json is being inserted into our columns, it’s possible for the actual data in the database to drift from those types. This can happen for a variety of reasons, including manual changes to the database, schemas going out of sync or un-type-checked code being run. The best way to avoid these issues is to only make changes to Json columns via Typescript & to be careful when running migrations.

Because these types are not enforced at the database level, migrations need to be managed a little more hands-on. When adding a new field to a Json column, we usually follow these steps:

- Add the new field as an optional field

- Deploy a change to start writing values to the new field

- Run a script to backfill old rows with the new field

- Make the new field required instead of optional

- Deploy a change to start reading values from the new field
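As a rough illustration of those steps, the sketch below walks one variant of a Json type through the migration. Everything in it is hypothetical: the `passcode` field, the type names and `backfillPasscode` are invented for the example, and the backfill is shown as a pure function over in-memory rows rather than as a real script against the database.

```typescript
// Steps 1 and 2: the new field is optional while old rows still lack it.
type ZoomLocationDuringMigration = {
  kind: "ZOOM";
  zoomMeetingID: number;
  passcode?: string;
};

// Step 4: once every row has been backfilled, the field becomes required.
type ZoomLocation = {
  kind: "ZOOM";
  zoomMeetingID: number;
  passcode: string;
};

// Step 3: fill in the new field for rows written before it existed.
function backfillPasscode(
  row: ZoomLocationDuringMigration,
  fallback: string,
): ZoomLocation {
  return { ...row, passcode: row.passcode ?? fallback };
}

const rowsBeforeBackfill: ZoomLocationDuringMigration[] = [
  { kind: "ZOOM", zoomMeetingID: 2453 }, // written before the change
  { kind: "ZOOM", zoomMeetingID: 9001, passcode: "abc" }, // written after it
];

const rowsAfterBackfill = rowsBeforeBackfill.map((row) => backfillPasscode(row, ""));
```

Only after the backfill has run against every row is it safe to switch db-types to the required version, since nothing at the database level would catch a row that was missed.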

Another issue that can be annoying is that Json does not have a native way of storing dates. Any Date values that get inserted are automatically serialized to strings. So the following error could happen:

model Event {

id String @id

jsonColumn Json

}

interface tEvent {

id: EventID;

jsonColumn: {

createdAt: Date;

}

}

await prisma.event.create({

data: {

id: uuidv4() as EventID,

jsonColumn: {

createdAt: new Date()

}

}

})

const myEvent = await prisma.event.findFirst({})

//Typescript will allow this

//But it will error at runtime because createdAt is actually a string

const day = myEvent.jsonColumn.createdAt.getDay()

The way that we solve this is by requiring all Date types in Json to be unioned with StringifiedDate, another branded type. This requirement is enforced by our prismaSchemaEnricher script. By doing this, Typescript will know that createdAt might be a string and we’ll get a type error unless we parse it back into a date.

export type StringifiedDate = string & { readonly _: "__DATE__" };

interface tEvent {

id: EventID;

jsonColumn: {

createdAt: Date | StringifiedDate;

};

}

await prisma.event.create({

data: {

id: uuidv4() as EventID,

jsonColumn: {

createdAt: new Date(),

},

},

});

const myEvent = await prisma.event.findFirst({});

//Typescript will give us an error because createdAt might be a string

const day = myEvent.jsonColumn.createdAt.getDay();

//Instead we do:

const createdAt = new Date(myEvent.jsonColumn.createdAt);

const day = createdAt.getDay();

This solution isn’t ideal & has other potential gaps, but Date values show up rarely in our Json columns anyway.
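The round trip can be reproduced without a database, since the behaviour comes from JSON serialization itself. In this sketch, `JsonDate`, `EventJson` and `toDate` are hypothetical names, and JSON.stringify followed by JSON.parse stands in for writing to and reading from a Json column.

```typescript
type StringifiedDate = string & { readonly _: "__DATE__" };

// Hypothetical alias for "a date that may have been through JSON".
type JsonDate = Date | StringifiedDate;

interface EventJson {
  createdAt: JsonDate;
}

// Hypothetical helper: normalizes either form back into a Date.
function toDate(value: JsonDate): Date {
  return value instanceof Date ? value : new Date(value);
}

const written: EventJson = { createdAt: new Date(0) };

// Stand-in for the database round trip: Date values come back as strings.
const readBack = JSON.parse(JSON.stringify(written)) as EventJson;

const cameBackAsString = typeof readBack.createdAt === "string";
const restored = toDate(readBack.createdAt);
```

Funnelling every read through a helper like this keeps the `Date | StringifiedDate` union from leaking into the rest of the code.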

Updating Prisma is also more complicated with our modifications. Most Prisma versions tend to have slight modifications to how the types in index.d.ts are structured, which means that updating Prisma typically breaks our codegen script. So far, this hasn’t been too big of a problem. The modifications to Prisma’s types are typically small enough that we’re able to make the corresponding updates to our codegen script in less than an hour. It’s possible that future updates to Prisma break our approach at a more fundamental level, but as long as Prisma relies on some form of codegen, I expect this approach to work well.

Overall, despite these downsides, we’ve found the type safety offered by Prisma after these modifications to be invaluable. I’ve yet to see a better way to manage type safety in a SQL database.

Footnotes

1. There are actually a couple more variations of these types for each table, but they all look similar. For example, UserUncheckedCreateInput and UserCreateManyInput also exist, but the changes we make to those are the same as UserCreateInput.